NFS is supposed to be a very simple and fast network file protocol. However, when I tried to use it on my Xen box between a Debian Squeeze DomU and an NFS server running on the Debian Squeeze Dom0, I noticed that write performance was abysmal: any write more than a couple KB in size would not only slow down to a crawl, but also bog down the DomU, making it rather difficult to even cancel the write.

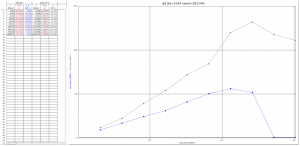

After some research and testing, I tracked it down to the rsize and wsize mount options, which set the maximum number of bytes transferred in a single NFS read or write request. Apparently, they default to 1M if you don’t specify anything else. In my case, wsize=131072 and rsize=262144 showed the highest write and read speeds respectively. However, wsize=131072 is not far from the cliff beyond which writing slows to a crawl, so I decided to back it down to 65536.
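As a rough sketch, the values above could be passed to mount like this. The server name, export path and mount point are placeholders I made up for illustration; only the rsize and wsize numbers come from the measurements described here. The script prints the command rather than running it, so it can be checked before being executed as root.

```shell
#!/bin/sh
# Hypothetical names: adjust the export and the mount point to your setup.
SERVER_EXPORT="dom0:/srv/nfs"   # assumption, not taken from the post
MOUNT_POINT="/mnt/nfs"          # assumption, not taken from the post

# Values from the post: the rsize that read fastest, and a wsize backed off
# from the point where writes started to crawl.
RSIZE=262144
WSIZE=65536

nfs_mount_cmd() {
    # Print the mount command instead of running it, so it can be reviewed first.
    printf 'mount -t nfs -o rsize=%s,wsize=%s %s %s\n' \
        "$RSIZE" "$WSIZE" "$SERVER_EXPORT" "$MOUNT_POINT"
}

nfs_mount_cmd
```

After mounting, the sizes actually in effect show up in /proc/mounts (for example via grep nfs /proc/mounts). They can be lower than requested if the server does not accept the value.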

Hello,

Can you give me the exact dd command lines you used for your test?

Using different oflag options gives different results.

I found that conv=fdatasync is best for writes, but I can’t figure out what is best for reads (maybe iflag=direct).

Thanks,

Fred

I didn’t add any oflag or conv parameters to dd. I just ran dd if=/dev/zero of=test.img bs=16M, if I remember correctly.
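For anyone who wants to repeat the measurement with the flags discussed in this thread, here is a minimal sketch. It assumes GNU dd from coreutils. The function names and the count value are my own additions for illustration (the count only makes the run stop at a known size); they are not what was used for the numbers in the post.

```shell
#!/bin/sh
# Sketch only: assumes GNU dd (coreutils).

# Write test: conv=fdatasync makes dd flush the file data before it reports
# its throughput, so the client's page cache does not inflate the number.
nfs_write_test() {
    # $1 = target file on the NFS mount, $2 = block size, $3 = block count
    dd if=/dev/zero of="$1" bs="$2" count="$3" conv=fdatasync
}

# Read test: iflag=direct opens the input file with O_DIRECT, bypassing the
# client's page cache. Not every filesystem accepts this flag.
nfs_read_test() {
    # $1 = file to read back, $2 = block size
    dd if="$1" of=/dev/null bs="$2" iflag=direct
}

# Example: 64 blocks of 16M = 1 GiB, run from inside the NFS mount.
# nfs_write_test test.img 16M 64
# nfs_read_test  test.img 16M
```

dd prints its throughput summary on stderr. Without conv=fdatasync on the write side, or iflag=direct on the read side, part of the transfer may be served by the client's cache, which would explain why different flags give different results.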