I tend to set the CPU pinning for my OpenMPI programs to the NUMA node. That way, they always access fast local memory without having to cross between processors. Some recent CPUs like the AMD Ryzen Threadripper have multiple NUMA nodes per socket, so pinning to the socket is not the same thing.

Since upgrading to Ubuntu 20.04, we have been seeing error messages like this:

    $ python3 -m mpi4py.bench helloworld
    --------------------------------------------------------------------------
    It looks like orte_init failed for some reason; your parallel process is
    likely to abort. There are many reasons that a parallel process can
    fail during orte_init; some of which are due to configuration or
    environment problems. This failure appears to be an internal failure;
    here's some additional information (which may only be relevant to an
    Open MPI developer):

      Setting processor affinity failed failed
      --> Returned value Error (-1) instead of ORTE_SUCCESS
    --------------------------------------------------------------------------

Launching through mpiexec/mpirun, even if it was with just one MPI rank, did not show the error:

    $ mpiexec -n 1 python3 -m mpi4py.bench helloworld
    Hello, World! I am process 0 of 1 on host1.
    $ mpirun -n 1 python3 -m mpi4py.bench helloworld
    Hello, World! I am process 0 of 1 on host1.
    $ mpiexec -n 4 python3 -m mpi4py.bench helloworld
    Hello, World! I am process 3 of 4 on host1.
    Hello, World! I am process 0 of 4 on host1.
    Hello, World! I am process 1 of 4 on host1.
    Hello, World! I am process 2 of 4 on host1.

If you look through the OpenMPI code, you can see that CPU pinning is done by different code depending on whether you run standalone (called singleton mode) or through mpiexec. The relevant bit for the former is in ess_base_fns.c. It searches for a hwloc object of type HWLOC_OBJ_NODE (which is deprecated on the hwloc side and identical to the newer HWLOC_OBJ_NUMANODE). Since hwloc 2.0, NUMA nodes are no longer containers for CPU cores, but sit beside them inside a HWLOC_OBJ_GROUP. Compare the output of lstopo 1.11.9 with that of lstopo 2.1.0:

    $ lstopo --version
    lstopo 1.11.9
    $ lstopo --output-format console
    Machine (31GB total) + Package L#0
      NUMANode L#0 (P#0 16GB)
        L3 L#0 (8192KB)
          L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (64KB) + Core L#0
            PU L#0 (P#0)
            PU L#1 (P#12)
    [...]

    $ lstopo --version
    lstopo 2.1.0
    $ lstopo --output-format console
    Machine (31GB total) + Package L#0
      Group0 L#0
        NUMANode L#0 (P#0 16GB)
        L3 L#0 (8192KB)
          L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (64KB) + Core L#0
            PU L#0 (P#0)
            PU L#1 (P#12)
    [...]
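To make the difference between the two layouts concrete, here is a toy model of the two tree shapes in Python. It is only a sketch: the `Obj` class and the helper functions are made up for illustration, they are not hwloc's API, and they do not reproduce OpenMPI's actual lookup. The model only shows that a search for the NUMA node finds an object with processing units below it in the 1.x layout, but an empty leaf in the 2.x layout.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Obj:
    """Hypothetical stand-in for a topology object (not hwloc's real type)."""
    kind: str
    children: List["Obj"] = field(default_factory=list)


def find_first(root: Obj, kind: str) -> Optional[Obj]:
    """Depth-first search for the first object of the given kind."""
    if root.kind == kind:
        return root
    for child in root.children:
        hit = find_first(child, kind)
        if hit is not None:
            return hit
    return None


def count_pus(obj: Obj) -> int:
    """Count the processing units at or below an object."""
    if obj.kind == "PU":
        return 1
    return sum(count_pus(child) for child in obj.children)


def cache_with_core() -> Obj:
    """One L3 cache holding one core with two hardware threads."""
    return Obj("L3", [Obj("Core", [Obj("PU"), Obj("PU")])])


# hwloc 1.x shape: the NUMA node contains the caches and cores.
hwloc1 = Obj("Machine", [Obj("Package", [Obj("NUMANode", [cache_with_core()])])])

# hwloc 2.x shape: the NUMA node is a leaf next to the caches, inside a group.
hwloc2 = Obj("Machine", [Obj("Package", [Obj("Group", [Obj("NUMANode"), cache_with_core()])])])

pus_numa_v1 = count_pus(find_first(hwloc1, "NUMANode"))
pus_numa_v2 = count_pus(find_first(hwloc2, "NUMANode"))
pus_group_v2 = count_pus(find_first(hwloc2, "Group"))
print(pus_numa_v1, pus_numa_v2, pus_group_v2)
```

In this model, looking for the group instead of the NUMA node gives back the processing units in the 2.x layout.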

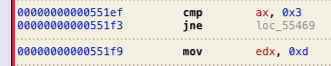

The current OpenMPI master (i.e. versions beyond the 4.1.x series) doesn’t bind through hwloc anymore, so the issue is fixed upstream (if only by accident). However, we’re stuck with Ubuntu 20.04 for the next two years, so let’s fix it ourselves. We load up the incriminating file, /usr/lib/x86_64-linux-gnu/openmpi/lib/libopen-rte.so.40.20.3, in Hopper and jump to orte_ess_base_proc_binding. Comparing the disassembly to the C code quickly reveals the instruction we need to change:

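0x3 is OPAL_BIND_TO_NUMA and 0xd is HWLOC_OBJ_NODE. We want the search to look for 0xc (HWLOC_OBJ_GROUP) instead, i.e. the object that now contains both the NUMA node and its cores. Looking at the hex code tells us that we need to make this change: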

- 66 83 F8 03 0F 85 70 02 00 00 BA 0D 00 00 00

+ 66 83 F8 03 0F 85 70 02 00 00 BA 0C 00 00 00

Here’s a bit of Python code to do that:

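    import mmap

    LIB = "/usr/lib/x86_64-linux-gnu/openmpi/lib/libopen-rte.so.40.20.3"
    OLD = bytes.fromhex("66 83 F8 03 0F 85 70 02 00 00 BA 0D 00 00 00")
    NEW = bytes.fromhex("66 83 F8 03 0F 85 70 02 00 00 BA 0C 00 00 00")

    # Map the library read-write and overwrite the instruction bytes in place.
    with open(LIB, "r+b") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as m:
        pos = m.find(OLD)
        if pos < 0:  # find() returns -1 when the bytes are absent
            raise SystemExit("pattern not found: already patched or a different build")
        m[pos:pos + len(NEW)] = NEW  # same length, so the file size is unchanged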

Update 2021-02-26

The recent kernel update from 5.4.0.65 to 5.4.0.66 switched us from HWLOC_OBJ_GROUP to HWLOC_OBJ_DIE. lstopo now reports

    $ lstopo --output-format console
    Machine (31GB total) + Package L#0
      Die L#0
        NUMANode L#0 (P#0 16GB)
        L3 L#0 (8192KB)
          L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (64KB) + Core L#0
            PU L#0 (P#0)
            PU L#1 (P#16)
    [...]

So the patch needs to be modified to have 0x13 in its fourth-to-last byte now.

Update 2021-05-07

The AMD Epyc still uses HWLOC_OBJ_GROUP instead of HWLOC_OBJ_DIE and thus needs the previous patch:

    Machine (252GB total)
      Package L#0
        Group0 L#0
          NUMANode L#0 (P#0 31GB)
          L3 L#0 (16MB)
            L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (32KB) + Core L#0
              PU L#0 (P#0)
              PU L#1 (P#48)
    [...]
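Since the right byte now depends on the machine, the choice can be sketched in Python as below. This is a sketch, not a tested tool: `pick_object_type` and `patch` are hypothetical helpers, the way the lstopo text is matched is my assumption about its console format, and the byte pattern is the one from my build of libopen-rte.so.40.20.3, so it may not occur in other builds. The helpers work on bytes in memory and leave reading and writing the library to you.

```python
import re

# Instruction bytes from the original patch; the fourth-to-last byte is the
# hwloc object type (0x0D = HWLOC_OBJ_NODE in the unpatched library).
PATTERN = bytes.fromhex("66 83 F8 03 0F 85 70 02 00 00 BA 0D 00 00 00")
TYPE_OFFSET = len(PATTERN) - 4

HWLOC_OBJ_GROUP = 0x0C
HWLOC_OBJ_DIE = 0x13


def pick_object_type(lstopo_text: str) -> int:
    """Choose the replacement byte from `lstopo --output-format console` text."""
    if re.search(r"\bDie L#\d+", lstopo_text):
        return HWLOC_OBJ_DIE
    if re.search(r"\bGroup\d* L#\d+", lstopo_text):
        return HWLOC_OBJ_GROUP
    raise ValueError("neither a Die nor a Group object found in lstopo output")


def patch(data: bytes, obj_type: int) -> bytes:
    """Return a copy of data with the object-type byte replaced."""
    pos = data.find(PATTERN)
    if pos < 0:
        raise ValueError("pattern not found: already patched or a different build")
    if data.find(PATTERN, pos + 1) >= 0:
        raise ValueError("pattern occurs more than once, refusing to guess")
    out = bytearray(data)
    out[pos + TYPE_OFFSET] = obj_type
    return bytes(out)
```

You would feed `pick_object_type` the output of lstopo on the target machine and write the result of `patch` back to a copy of the library, keeping the original as a backup.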

Update 2022

Unfortunately, OpenMPI 5 has still not been released, so Ubuntu 22.04 retains this problem. My binary-patching trick does not work anymore either, because the compiler makes some complex optimizations. Therefore, I suggest you use

    OMPI_MCA_rmaps_base_mapping_policy=l3cache OMPI_MCA_hwloc_base_binding_policy=l3cache

instead of

    OMPI_MCA_rmaps_base_mapping_policy=numa OMPI_MCA_hwloc_base_binding_policy=numa

This still gives you the benefit of pinning to more than a single core, which gives the kernel some scheduling flexibility.
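If you would rather not rely on the shell environment, the same two variables can be set from inside a Python script. The sketch below assumes that mpi4py initialises MPI when `mpi4py.MPI` is first imported (its default behaviour), so the variables have to be in place before that import. `apply_l3cache_policy` is a hypothetical helper name.

```python
import os

# The two MCA parameters from above, with the l3cache workaround values.
L3CACHE_POLICY = {
    "OMPI_MCA_rmaps_base_mapping_policy": "l3cache",
    "OMPI_MCA_hwloc_base_binding_policy": "l3cache",
}


def apply_l3cache_policy(env=None):
    """Set the policy variables unless the caller already chose values."""
    if env is None:
        env = os.environ
    for name, value in L3CACHE_POLICY.items():
        env.setdefault(name, value)
    return env


# Usage: call this before the first MPI import, e.g.
#   apply_l3cache_policy()
#   from mpi4py import MPI
```

Using `setdefault` means a value exported in the shell still wins, so the script does not silently override a deliberate choice.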