PPC64, the architecture of the IBM POWER4 through POWER7, is big-endian. The POWER8 through POWER10 also have a little-endian mode, which is why PPC64LE is significantly more common nowadays, even though these newer processors can still switch to big-endian mode. I have a bit of manually-vectorized code that specifically supports POWER7 or newer POWERs running in big-endian mode, so to make sure that still works I occasionally need to emulate a PPC64 Linux. There are not many suitable distributions left — Debian dropped the architecture after Debian 8, Ubuntu after 16.04, Void Linux for PowerPC is discontinued, even Fedora dropped it after Fedora 28. Out of the big distributions, that only leaves CentOS 7, which is getting old and only has one year of support left. However, there is Adélie Linux, a relatively new distribution still in development, and Debian unstable still has a PPC64 port. Read on below for Dockerfiles that you can use to run the two inside Docker on amd64 via multiarch.

Category Archives: Linux

Ubuntu 20.04: OpenMPI bind-to NUMA is broken when running without mpiexec

I tend to set the CPU pinning for my OpenMPI programs to the NUMA node. That way, they always access fast local memory without having to cross between processors. Some recent CPUs like the AMD Ryzen Threadripper have multiple NUMA nodes per socket, so pinning to the socket is not the same thing.

Since upgrading to Ubuntu 20.04, we were seeing error messages like this:

$ python3 -m mpi4py.bench helloworld
--------------------------------------------------------------------------
It looks like orte_init failed for some reason; your parallel process is
likely to abort. There are many reasons that a parallel process can
fail during orte_init; some of which are due to configuration or
environment problems. This failure appears to be an internal failure;
here's some additional information (which may only be relevant to an
Open MPI developer):

  Setting processor affinity failed failed
  --> Returned value Error (-1) instead of ORTE_SUCCESS
--------------------------------------------------------------------------

Launching through mpiexec/mpirun, even if it was with just one MPI rank, did not show the error:

$ mpiexec -n 1 python3 -m mpi4py.bench helloworld
Hello, World! I am process 0 of 1 on host1.
$ mpirun -n 1 python3 -m mpi4py.bench helloworld
Hello, World! I am process 0 of 1 on host1.
$ mpiexec -n 4 python3 -m mpi4py.bench helloworld
Hello, World! I am process 3 of 4 on host1.
Hello, World! I am process 0 of 4 on host1.
Hello, World! I am process 1 of 4 on host1.
Hello, World! I am process 2 of 4 on host1.

If you look through the OpenMPI code, you can see that CPU pinning is done by different code depending on whether you run standalone (called singleton mode) or through mpiexec. The relevant bit for the former is in ess_base_fns.c. It searches for a hwloc object of type HWLOC_OBJ_NODE (which is deprecated on the hwloc side and identical to the newer HWLOC_OBJ_NUMANODE). Since hwloc 2.0, NUMA nodes are no longer containers for CPU cores, but exist beside them inside a HWLOC_OBJ_GROUP. Compare the output of hwloc 1.x and 2.x on the same machine:

$ lstopo --version
lstopo 1.11.9
$ lstopo --output-format console
Machine (31GB total) + Package L#0
  NUMANode L#0 (P#0 16GB)
    L3 L#0 (8192KB)
      L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (64KB) + Core L#0
        PU L#0 (P#0)
        PU L#1 (P#12)
[...]

$ lstopo --version
lstopo 2.1.0
$ lstopo --output-format console
Machine (31GB total) + Package L#0
  Group0 L#0
    NUMANode L#0 (P#0 16GB)
    L3 L#0 (8192KB)
      L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (64KB) + Core L#0
        PU L#0 (P#0)
        PU L#1 (P#12)
[...]

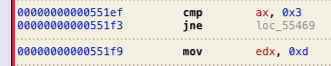

The current OpenMPI master (i.e. versions beyond the 4.1.x series) don’t bind through hwloc anymore, so the issue is fixed upstream (if only by accident). However, we’re stuck with Ubuntu 20.04 for the next two years, so let’s fix it ourselves. We load up the incriminating file, /usr/lib/x86_64-linux-gnu/openmpi/lib/libopen-rte.so.40.20.3, in Hopper and jump to orte_ess_base_proc_binding. Comparing it to its C code quickly reveals the instruction we need to change:

0x3 is OPAL_BIND_TO_NUMA and 0xd is HWLOC_OBJ_NODE. Looking at the hex code tells us that we need to make this change:

- 66 83 F8 03 0F 85 70 02 00 00 BA 0D 00 00 00

+ 66 83 F8 03 0F 85 70 02 00 00 BA 0C 00 00 00

Here’s a bit of Python code to do that:

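import mmap

# Patch the library in place: replace HWLOC_OBJ_NODE (0x0D) with HWLOC_OBJ_GROUP (0x0C)
with open("/usr/lib/x86_64-linux-gnu/openmpi/lib/libopen-rte.so.40.20.3", 'r+b') as f:
    m = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE)
    # Jump to the instruction sequence and overwrite it with the patched one
    m.seek(m.find(bytes.fromhex("66 83 F8 03 0F 85 70 02 00 00 BA 0D 00 00 00")))
    m.write(      bytes.fromhex("66 83 F8 03 0F 85 70 02 00 00 BA 0C 00 00 00"))
    m.close()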
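The snippet above assumes that the byte pattern occurs exactly once in the library. A slightly more defensive variant is sketched below. The helper names `patch_once` and `patch_file` are made up for illustration, and it is probably wise to try this on a copy of the file first.

```python
# Byte patterns taken from the diff above.
OLD = bytes.fromhex("66 83 F8 03 0F 85 70 02 00 00 BA 0D 00 00 00")
NEW = bytes.fromhex("66 83 F8 03 0F 85 70 02 00 00 BA 0C 00 00 00")


def patch_once(data: bytes, old: bytes, new: bytes) -> bytes:
    """Return a copy of data with the single occurrence of old replaced by new."""
    if len(old) != len(new):
        raise ValueError("the patch must not change the file size")
    count = data.count(old)
    if count != 1:
        raise ValueError(f"expected exactly one match, found {count}")
    return data.replace(old, new)


def patch_file(path: str) -> None:
    """Patch the file at path in place (hypothetical helper, not called here)."""
    with open(path, "rb") as f:
        original = f.read()
    # Compute the result first, so nothing is written if the check fails.
    patched = patch_once(original, OLD, NEW)
    with open(path, "wb") as f:
        f.write(patched)
```

Calling `patch_file` on a copy of libopen-rte.so either applies the change or raises a `ValueError` and leaves the file untouched, which is a useful signal that the library was built differently.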

Update 2021-02-26

The recent kernel update from 5.4.0.65 to 5.4.0.66 switched us from HWLOC_OBJ_GROUP to HWLOC_OBJ_DIE. lstopo now reports

$ lstopo --output-format console
Machine (31GB total) + Package L#0
  Die L#0
    NUMANode L#0 (P#0 16GB)
    L3 L#0 (8192KB)
      L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (64KB) + Core L#0
        PU L#0 (P#0)
        PU L#1 (P#16)
[...]

So the patch needs to be modified to have 0x13 in its fourth-to-last byte now.

Update 2021-05-07

The AMD Epyc still uses HWLOC_OBJ_GROUP instead of HWLOC_OBJ_DIE and thus needs the previous patch:

Machine (252GB total)
  Package L#0
    Group0 L#0
      NUMANode L#0 (P#0 31GB)
      L3 L#0 (16MB)
        L2 L#0 (512KB) + L1d L#0 (32KB) + L1i L#0 (32KB) + Core L#0
          PU L#0 (P#0)
          PU L#1 (P#48)
[...]

Update 2022

Unfortunately, OpenMPI 5 has still not been released, so Ubuntu 22.04 retains this problem. My binary-patching trick no longer works either because the compiler now applies some complex optimizations to that code. Therefore, I suggest you use

OMPI_MCA_rmaps_base_mapping_policy=l3cache
OMPI_MCA_hwloc_base_binding_policy=l3cache

instead of

OMPI_MCA_rmaps_base_mapping_policy=numa
OMPI_MCA_hwloc_base_binding_policy=numa

This still gives you the benefit of pinning to more than a single core, which gives the kernel some scheduling flexibility.
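For a Python program that is started without mpiexec, one option is to set these variables from inside the script before MPI is initialised. This is only a sketch: the helper name `binding_env` is hypothetical, and it assumes that mpi4py initialises MPI when `mpi4py.MPI` is first imported.

```python
import os


def binding_env(policy: str = "l3cache") -> dict:
    """Build the two OpenMPI MCA environment variables for one policy."""
    return {
        "OMPI_MCA_rmaps_base_mapping_policy": policy,
        "OMPI_MCA_hwloc_base_binding_policy": policy,
    }


# This has to run before MPI is initialised,
# i.e. before the first "from mpi4py import MPI".
os.environ.update(binding_env("l3cache"))
```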

Setting up BigBlueButton

Like so many other people, most of my two dozen colleagues and I are currently working from home full-time. While even before the current situation we have always had people work at home for individual days, we didn’t have the infrastructure to replace physical person-to-person communication. The first day was full of phone calls and emails, while our usual video conferencing system DFNconf, provided by the German research network, was struggling to keep up with growing demand. Microsoft Teams was also collapsing under the unexpected load, and I suspect other services like WebEx and Zoom had similar problems. As we might be stuck in this situation for months, we decided to take things into our own hands. For privacy reasons, we wouldn’t be able to use any of these commercial services anyway.

The first step was a chat system. We already have a self-hosted GitLab instance, so switching on Mattermost, an open-source competitor to Slack or Microsoft Teams, was a matter of minutes. Create a DNS record, wait for it to propagate, edit one GitLab config file, and restart GitLab twice.

The next step was video, which is what this article is about. Unlike Microsoft Teams, Mattermost does not have a built-in video conferencing solution. It does have an API that allows third-party software and services to integrate with it. There is a list of video integrations. Our requirements were that it be self-hosted, free, straightforward to set up, and well maintained. That basically led us to BigBlueButton, which can interface with Mattermost via a plugin.

Prerequisites

You need two Linux machines. We have access to an OpenStack cloud provided by the state (bwCloud), so that was easy. The first one, called turn.example.com, has 2 GB RAM, 2 CPU cores, and Ubuntu 18.04. The second one, called video.example.com, has 8 GB RAM, 4 CPU cores and Ubuntu 16.04 (no, that is not a typo). Once the machines are running, set up DNS records for IPv4 and IPv6 and wait until they propagate. Then, SSH into each of them and get them prepared:

sudo hostnamectl set-hostname XXX.example.com
sudo apt-get update
sudo apt-get install language-pack-en
sudo systemctl set-environment LANG=en_US.UTF-8
sudo apt-get upgrade
sudo apt-get dist-upgrade
sudo reboot

While that is running, configure your cloud provider’s firewall rules. turn.example.com needs incoming IPv4 and IPv6 access for tcp/80, tcp+udp/3478, tcp+udp/443, and udp/49152-65535, while video.example.com needs tcp/80, tcp/443, and udp/16384-32768.

Setting up the TURN server

SSH into turn.example.com and run

wget -qO- https://ubuntu.bigbluebutton.org/bbb-install.sh | sudo bash -s -- \
  -c turn.example.com:YYYYYYYY -e you@example.com

Instead of the placeholder YYYYYYYY, you should use a random token. You’ll need it again in the next section to connect BigBlueButton to your TURN server.
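One way to come up with such a token is Python’s `secrets` module. This is just a sketch; the length of 16 bytes (32 hexadecimal characters) is my assumption, not something the installer prescribes.

```python
import secrets

# 16 random bytes, printed as 32 hexadecimal characters
turn_secret = secrets.token_hex(16)
print(turn_secret)
```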

Setting up BigBlueButton

SSH into video.example.com and run

wget -qO- https://ubuntu.bigbluebutton.org/bbb-install.sh | sudo bash -s -- \
  -v xenial-22 -s video.example.com -e you@example.com \
  -c turn.example.com:YYYYYYYY
sudo apt-get install bbb-webhooks
sudo bbb-conf --stop
sudo sed -i 's/allowStartStopRecording=.*/allowStartStopRecording=false/g' \
  /usr/share/bbb-web/WEB-INF/classes/bigbluebutton.properties
sudo sed -i 's/disableRecordingDefault=.*/disableRecordingDefault=true/g' \
  /usr/share/bbb-web/WEB-INF/classes/bigbluebutton.properties
sudo bbb-conf --start
sudo bbb-conf --secret

The last command will print out a URL and a secret. Save these for later; you’ll need them to integrate with Mattermost. If you don’t have Mattermost, add -g to the third line and the installer will install the Greenlight management UI for you.

Integrating Mattermost and BigBlueButton

Download the latest release from the GitHub repo. Go to your Mattermost system console, go to Plugin Management and upload the file. Refresh the page and go to the plugin’s settings. Make sure the plugin is disabled, paste the URL (https://video.example.com/bigbluebutton/api) and secret from the previous step, and click Save. Then, enable the plugin and click Save again.

That’s it, you now have a video button at the top of every Mattermost conversation. Click it in a direct message or channel and it will post an invitation link. Everyone can click it to join. The plugin will always show the names of the people that have joined a conference. There’s an end meeting button, but the conference will automatically end a few minutes after the last person has left.

Configuring Phone Dial-in

On video.example.com, configure FreeSWITCH to route incoming calls by creating /opt/freeswitch/etc/freeswitch/dialplan/public/dialin.xml with the following contents:

<extension name="from_my_provider">

<condition field="destination_number" expression="^ZZZZZZZZZZ">

<action application="answer"/>

<action application="sleep" data="500"/>

<action application="play_and_get_digits" data="5 5 3 7000 # conference/conf-pin.wav ivr/ivr-that_was_an_invalid_entry.wav pin \d+"/>

<action application="transfer" data="SEND_TO_CONFERENCE XML public"/>

</condition>

</extension>

<extension name="check_if_conference_active">

<condition field="${conference ${pin} list}" expression="/sofia/g" />

<condition field="destination_number" expression="^SEND_TO_CONFERENCE$">

<action application="set" data="bbb_authorized=true"/>

<action application="transfer" data="${pin} XML default"/>

</condition>

</extension>

Configure your SIP PBX/provider to route calls for your number (assumed to be +49 711 12345678 in the following) to sip:ZZZZZZZZZZ@video.example.com;transport=tcp without registration. ZZZZZZZZZZ is a secret token and you should pick a random one.

In /usr/share/bbb-web/WEB-INF/classes/bigbluebutton.properties on video.example.com, set

defaultDialAccessNumber=+49-711-12345678
defaultWelcomeMessageFooter=<br><br>To join this meeting by phone, dial:<br> %%DIALNUM%%<br>Then enter %%CONFNUM%% as the conference PIN number.<br>Note that this will only work once at least one person has joined the audio bridge from their computer.<br>You can mute and unmute yourself by pushing 0.

and restart BigBlueButton (sudo bbb-conf --stop && sudo bbb-conf --start). Open tcp/5060 in the firewall and you are ready.

Using Greenlight

If you are using Greenlight instead of Mattermost to manage your conferences, it will be available at https://video.example.com. I have not tried it, so I am leaving you to read the documentation yourself.

Conclusion

We decided to do this on Monday around noon (day one of the semi-lockdown) and I sent out the announcement email to my colleagues just four hours later. In other words, BigBlueButton is really easy to set up, thanks to bbb-install.

Today is day four. So far all our meetings were small (< 5 people), but BigBlueButton is extremely light on server resources. Audio quality is great (though the noise gate is a bit aggressive sometimes). Screen sharing and webcam streaming work well, even from networks with firewalls that block all UDP traffic. Firefox, Chrome and Safari work equally well; the only thing that is currently missing is screen sharing from Safari. The server mainly expends CPU time for mixing the audio conferences (extrapolating suggests we can handle at least 20 participants, probably more), while all video is just relayed to the other participants. That means that your server needs enough bandwidth for every participant to exchange ~500 Kbit/s with every other participant if everyone has their camera enabled. Your clients need 500 Kbit/s upstream total and 500 Kbit/s downstream for every other participant.
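The bandwidth rule of thumb above can be turned into a small calculation. This is a rough sketch: the client figures follow the text directly, while the server figures are my own reading of "all video is just relayed" (one incoming stream per participant, relayed to every other participant). The function name is made up.

```python
def video_bandwidth_kbit(participants: int, per_stream_kbit: int = 500) -> dict:
    """Rough bandwidth estimate when every participant has a camera enabled."""
    if participants < 1:
        raise ValueError("need at least one participant")
    others = participants - 1
    return {
        "client_up": per_stream_kbit,                         # one upload per client
        "client_down": per_stream_kbit * others,              # one stream per other person
        "server_in": per_stream_kbit * participants,          # every camera arrives once
        "server_out": per_stream_kbit * participants * others,  # and is relayed to all others
    }


print(video_bandwidth_kbit(5))
```

Under these assumptions the outgoing server bandwidth grows roughly quadratically with the number of cameras, so it is the first figure to check before a larger meeting.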

Week 2 Update (2020-03-24)

Today we had our first bigger video meeting. Ten people with audio, five with video and the server was operating at around 50% of one CPU core. Three problems were discovered:

- Safari cannot send video if it is behind a firewall that blocks UDP, producing an error 1020. Judging from packet captures, it does not appear to fall back to the TURN server.
  Solution: use Chrome or Firefox.

- Firefox cannot send audio if ICE is disabled. uBlock and some other privacy addons might cause that. Go to about:config and check whether any of the media.peerconnection.* settings have been modified from their defaults (modified settings are displayed in boldface).
  Solution: disable the addons and return these settings to their default. If it works after that, you might re-enable the addons and whitelist your server.

- Some people don’t have headsets. Their microphones pick up ambient noise, overdrive, feed back, etc. and make audio a pain to listen to.
  Solution: get a USB headset. I have a Plantronics Blackwire C320 (mainly because it is compatible with my desk phone), which is a few years old and no longer sold, but you can buy its successor, the Plantronics Blackwire 3220. It’s cheap (around 30 Euros) and good enough for someone like me who only needs it for an hour or two per day. Of course, they are sold out everywhere, so be prepared to wait for multiple weeks to get yours delivered. Until then, use your smartphone headset as it’s still better than your computer’s built-in microphone, or dial into the conference via telephone.

Week 6 Update (2020-04-23)

We have had a few minor complaints about audio quality, mainly in direct comparison to Webex and Zoom. These seem to do better echo cancellation, apply some kind of magic audio processing that makes built-in microphones not sound as terrible, and have (better) packet loss concealment. Still, considering its price, privacy, and ease of use, I prefer BBB.

There is a more significant audio issue in BBB (#7007) though where you get occasional drops and crackles for no apparent reason. I set use-dtx=0, jitterbuffer=60, and energy-level=50 as suggested there and it gets a bit better, but there is still room for improvement. Hopefully that will be resolved by the BBB developers soon.

Today I updated to the latest version of BigBlueButton. It is as simple as running bbb-install again and only takes a minute or two.

OpenWRT on AVM Fritz!Box 3370

I was looking for a new DSL modem and router as I am switching from cable to VDSL2. I was eyeing the Ubiquiti EdgeRouter series for a while because they have a big feature set at a reasonable price. I was a bit reluctant about the X series as they seem to be a bit troubled by their small flash memory and the Lite doesn’t have an SFP slot, which would have been nice for the fibre-to-the-home future. Also, both the X and the Lite have been available for quite a few years now, so I’m not sure how long they would have remained in firmware support. The 4 is much more expensive however, and I’d still need a VDSL2 modem, which seems to cost around 100€ (e.g. Draytek Vigor 130 or Allnet ALL-BM200VDSL2V).

Of course, I could have gotten an off-the-shelf router with an integrated modem, like the AVM Fritz!Box series that’s very popular in Germany and probably paid less in total (standalone VDSL2 modems are rather expensive because not many people want/need them). I had a Fritz!Box on cable for the past few years and am not particularly happy with the quality of their firmware though. The hardware is great, however.

So I decided to go with OpenWRT. The only built-in DSL modems it supports are Lantiq chips, so it had to be one from that list. I wanted something that has at least 64 MB of flash memory (so I could install some extra packages) and Gigabit Ethernet on all four ports. Luckily, OpenWRT recently got full support for the AVM Fritz!Box 3370. AVM announced that model back in 2010 and dropped official support for it in 2015, so they are available for ~25€ on eBay nowadays. Other models that would have been nice but are not currently supported by OpenWRT are the 3390 (simultaneous dual-band WiFi), and 3490/7490 (USB 3.0, 802.11ac, 512 MB flash memory and 256 MB RAM; the 7490 additionally has phone ports which can’t be used with OpenWRT). The ZyXEL P-2812HNU-F1 and P-2812HNU-F3 are quite similar to the AVM 3370, but they are not as readily available on eBay and tend to cost about twice as much.

OpenWRT doesn’t provide too much information on how to install, but it’s quite straightforward. First, you need to check if your device is at least revision 2 and that it doesn’t have a certain bad bootloader version that makes installation more difficult. Go to http://192.168.178.1/support.lua on the original firmware, log in and click “Support-Daten erstellen”. In the resulting file, you should see something like the following at the top:

HWRevision 175
HWSubRevision 5
ProductID Fritz_Box_3370
[...]
urlader-version 2475

Also, we need to know what kind of flash memory chip the device has. Scroll down to ##### BEGIN SECTION dmesg and look for something like

[ 1.450000] [HSNAND] Hardware-ECC activated
[ 1.450000] NAND device: Manufacturer ID: 0x2c, Chip ID: 0xf1 (Micron NAND 128MiB 3,3V 8-bit)
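If you would rather not scan the support file by eye, the relevant fields can be pulled out with a few regular expressions. This is a sketch that assumes the file looks like the excerpts above (one key and value per line, and the NAND line as shown); the function name is hypothetical.

```python
import re


def parse_support_data(text: str) -> dict:
    """Extract hardware revision, bootloader version and NAND vendor."""
    info = {}
    for key in ("HWRevision", "HWSubRevision", "urlader-version"):
        match = re.search(rf"^{re.escape(key)}\s+(\S+)", text, re.MULTILINE)
        if match:
            info[key] = match.group(1)
    # e.g. "NAND device: Manufacturer ID: 0x2c, Chip ID: 0xf1 (Micron NAND ..."
    match = re.search(r"NAND device: Manufacturer ID: \S+ Chip ID: \S+ \((\w+) NAND", text)
    if match:
        info["nand_vendor"] = match.group(1)
    return info
```

The vendor name it returns ("Micron" in the excerpt above) tells you which set of firmware files to pick.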

Download the files corresponding to your flash chip. Set your IP address statically to 192.168.178.20/24 and reboot the router. When the ethernet interface comes up after a few seconds, ftp 192.168.178.1 and upload the firmware as documented by OpenWRT:

quote USER adam2
quote PASS adam2
binary
debug
passive
quote SETENV linux_fs_start 0
quote MEDIA FLSH
put openwrt-lantiq-xrx200-avm_fritz3370-rev2-micron-squashfs-eva-kernel.bin mtd1
put openwrt-lantiq-xrx200-avm_fritz3370-rev2-micron-squashfs-eva-filesystem.bin mtd0
quote REBOOT

After a few minutes, OpenWRT is ready at 192.168.1.1.

Note that Fritz!Box 3370 support is not in the 18.06 release version, only in the current snapshot builds. This means that the luci web interface is not pre-installed and you should only install new packages within the first few days after flashing (so you don’t pick up newer packages incompatible with your firmware).

After using the 3370 for a little while, unfortunately I noticed that it doesn’t handle more than around 60 Mbit/s. So be warned that it might not exhaust a 100 Mbit/s down, 50 Mbit/s up line. This seems to be due to a slightly underpowered CPU.

Root disk spindown on Debian 9

I recently installed Debian 9 on a Seagate PersonalCloud. Because the device will only get backed up to once every day, I want its disk to be spun down when it’s not needed. Even on a minimal install, you’ll find quite a few background services that access the root disk every few minutes. Here is what I had to do to keep my disk spun down.

hdparm

First, install hdparm (apt-get install hdparm) and configure /etc/hdparm.conf to spin down your disks after 10 minutes:

#quiet
spindown_time = 120
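The value 120 corresponds to 10 minutes because spindown_time uses the same encoding as hdparm -S: values from 1 to 240 count in units of 5 seconds, and higher values have special meanings. A small sketch of that encoding, as I understand it from the hdparm man page (the function name is made up):

```python
def spindown_seconds(value: int) -> int:
    """Translate an hdparm -S / spindown_time value into seconds."""
    if value == 0:
        return 0                          # standby timeout disabled
    if 1 <= value <= 240:
        return value * 5                  # units of 5 seconds
    if 241 <= value <= 251:
        return (value - 240) * 30 * 60    # units of 30 minutes
    if value == 252:
        return 21 * 60                    # 21 minutes
    if value == 255:
        return 21 * 60 + 15               # 21 minutes and 15 seconds
    raise ValueError("253 is vendor-defined and 254 is reserved")


print(spindown_seconds(120) // 60, "minutes")
```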

Since hdparm isn’t available in the initrd when the rule /lib/udev/rules.d/85-hdparm.rules fires, you need to add /etc/systemd/system/hdparm-sda.service to apply the setting later during boot:

[Unit]
Description=hdparm sda
ConditionPathExists=/lib/udev/hdparm

[Service]
Type=forking
Environment=DEVNAME=/dev/sda
ExecStart=/lib/udev/hdparm
TimeoutSec=0
StandardOutput=tty
RemainAfterExit=yes

[Install]
WantedBy=multi-user.target

Now systemctl daemon-reload && systemctl enable hdparm-sda && systemctl start hdparm-sda.

cron.hourly

When you look into /var/log/syslog, you see messages like

CRON[393]: (root) CMD ( cd / && run-parts --report /etc/cron.hourly)

Of course, we can’t have these messages written to a log if we want the disk to remain spun down, so edit /etc/crontab and comment out the hourly job. As long as /etc/cron.hourly is empty, this will not do any harm. If you have any hourly jobs, you might want to move them to daily jobs.

systemctl timers

systemd has its own cron-like timer mechanism. You can view active timers with systemctl list-timers --all and disable the ones you don’t need, especially ones that run more than once a day, for example with systemctl disable snapper-timeline.timer && systemctl stop snapper-timeline.timer.

smartd

If you have smartmontools installed (apt-get install smartmontools), you’ll see lines like the following appear in the syslog when the disk is spun down:

smartd[294]: Device: /dev/sda [SAT], is in STANDBY mode, suspending checks

Writing these messages causes the disk to spin up, so we need to disable smartd: systemctl stop smartd && systemctl disable smartd. To keep monitoring our disks, put the following into /etc/cron.daily/smart-check and then chmod +x /etc/cron.daily/smart-check:

#!/bin/bash
/usr/sbin/smartctl -q errorsonly -A /dev/sda

systemd-tmpfiles-clean

When you run

inotifywait -m -r -e access -e modify -e create -e delete --timefmt '%c' --format '%T PATH:%w%f EVENTS:%,e' --exclude '/(dev/pts|proc|sys|run)' /

to see what is going on on your disk, you’ll see that your temporary directories are being cleaned every couple of minutes. We can reduce that to once every two weeks by running systemctl edit systemd-tmpfiles-clean.timer and pasting

[Timer]
OnBootSec=5min
OnUnitActiveSec=14d

postfix

If you have Postfix installed, inotify will also show you that it periodically checks its queue directories. So uninstall postfix (apt-get remove postfix) and configure a forwarding MTA that does not run as a daemon.

systemd-timesync

This last one was tricky to discover because it doesn’t appear in the logs and inotify doesn’t see it. You can enable logging of every disk access to the kernel log to see even more, but you need to disable syslog first; otherwise you’ll get a self-amplifying write cascade.

systemctl stop syslog.socket
systemctl stop rsyslog.service
dmesg -C
echo 1 | sudo tee /proc/sys/vm/block_dump
dmesg -Tw

Here you’ll see that systemd-timesyncd stores its last sync date by touching a file. It only changes metadata, which is why inotify doesn’t see it happening. My solution was to put the following into /etc/tmpfiles.d/zz-clock.conf:

d /run/systemd/timesync 0755 systemd-timesync systemd-timesync - -
f /run/systemd/clock 0644 systemd-timesync systemd-timesync - -
f /run/systemd/timesync/clock 0644 systemd-timesync systemd-timesync - -
L+ /var/lib/systemd/timesync/clock - - - - /run/systemd/timesync/clock
L+ /var/lib/systemd/clock - - - - /run/systemd/clock

/var/lib/systemd/clock is used by systemd 234 and lower, while /var/lib/systemd/timesync/clock is used by systemd 235 and higher. So the latter will only be needed once you upgrade to Debian 10.
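To illustrate why touching a file is easy to miss, the following sketch changes only a file’s timestamps and shows that its contents stay the same. The inotifywait call further up only watches access, modify, create and delete events; I would expect that adding -e attrib makes such metadata changes visible, but I have not verified that on this setup. The function name is made up.

```python
import os
import tempfile


def touch_metadata_only(path: str) -> tuple:
    """Bump a file's timestamps without writing to it; return (old, new) mtime."""
    old_mtime = os.stat(path).st_mtime
    new_mtime = old_mtime + 60            # pretend a minute has passed
    os.utime(path, (new_mtime, new_mtime))
    return old_mtime, os.stat(path).st_mtime


# Create a scratch file so the demonstration does not touch real system files.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"unchanged")
demo_path = tmp.name

old, new = touch_metadata_only(demo_path)
with open(demo_path, "rb") as f:
    content = f.read()
os.unlink(demo_path)

print(new > old, content)
```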

AMD Ryzen Threadripper: NUMA architecture, CPU affinity and HTCondor

At the university lab I work at, we usually get desktop computers with Intel Core i7 Extreme CPUs. They have more memory controllers and more cores than Intel’s mainstream CPUs, which is great for us because we do software development and simulations on these machines. Now that it was time to order some new machines, we decided to check out what AMD offers. The last time AMD’s Athlon series was competitive with Intel in the high-end desktop market was probably in the days before the Core i series was introduced a decade ago. Even in the server/HPC market, AMD’s Opteron series hadn’t been a serious Intel competitor after 2012. Now with the Ryzen, AMD finally has something that beats Intel’s high-end offerings both in absolute price and in price per performance.

Today’s high-end CPU market

As prices on Intel’s high-end chips seem to have been increasing with every generation and after Intel didn’t handle the Spectre/Meltdown disaster very well at all earlier this year, we decided that it really was time to break Intel dominance in our lab. I would actually have liked to get something with a non-x86 architecture (because why not), but the requirement of eight CPU cores and four DDR4 memory channels ruled out pretty much everything. The remaining ones were either eliminated based on price (the IBM POWER9 CPU as in the Talos II) or because they are not available in the market (ARM in the form of the Cavium ThunderX2 or Qualcomm Centriq). The POWER9 and ThunderX2 actually have eight memory channels (the Talos’s mainboard only provides access to four though), as does the AMD Epyc (the server version of the Threadripper), so they or their successors might still become interesting options in the future. For comparison, Intel’s current Xeon Scalable series only has six channels, as does the Centriq.

The AMD Ryzen family

We decided to order a Threadripper 1950X with 16 cores. When I unpacked it and started my first benchmark simulation, I was pretty disappointed by the performance though. It turned out that the Threadripper is a NUMA architecture, but you need to toggle a BIOS option (set Memory Interleave to Channel) before it actually presents itself as such to the operating system. In its default mode, memory latencies are very high because a process might be running on a CPU core that is using memory on the other pair of memory controllers.

The topology it presents in NUMA mode is roughly like this: out of the 16 cores, four cores each share a common L3 cache to make what AMD calls a core complex (CCX). Two CCXes together share a two-channel memory controller and sit on the same die. Two dies are interconnected with a 50 GB/s link. In NUMA mode and after setting the correct CPU affinity on my simulation processes (which is what the remaining sections of this blog post will be about), I was getting the expected performance, and as you might have expected, the Threadripper really is powerful. You can get something comparable from Intel, the Core i9 Extreme, but these chips are extreme not only in name but also in price.

Also, I love the simplicity of AMD’s product lineup: the Ryzen series has two memory channels and 4-8 cores (i.e. one die), the Ryzen Threadripper has four memory channels and 8-16 cores (two dies) and the Epyc has eight memory channels and up to 32 cores (four dies). Don’t bother with the second-generation Threadrippers that were just announced: the 2920X is almost identical to the 1920X and the 2950X to the 1950X. The 2970WX and 2990WX are really odd chips: they have four dies (like the Epyc), but two of them don’t have their own memory controllers. So half of these chips would be as fast as my 1950X in NUMA mode, and the other half would be slower than my 1950X in its default mode.

CPU affinity for MPI

Usually, you only have to worry about CPU pinning and process affinity on servers, high-performance compute clusters and some workstations. Since AMD introduced the Opteron and Intel introduced the Core architecture, machines with multiple processor sockets have been associating memory controllers with CPUs in such a way that memory access within a socket was fast and between sockets was slower. This is called NUMA. To minimize inter-socket memory accesses, you need to tell your operating system’s scheduler to never move threads or processes between cores. This is called pinning or affinity. On servers, it is usually taken care of by the application, while on HPC clusters the admin configures the MPI library to do the right thing. The Threadripper is probably the first chip that brings NUMA to the desktop market.

If you are using OpenMPI, you just need to set two environment variables

export OMPI_MCA_hwloc_base_binding_policy=numa
export OMPI_MCA_rmaps_base_mapping_policy=numa

so that processes are pinned (bound) to a NUMA domain (usually that’s a socket, but in the Threadripper it’s a die with two CCXes). The second variable tells OpenMPI to create (map) processes on alternating NUMA domains — so the first one is put on the first die, the second one on the second die, the third one on the first die, and so on.
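The alternating placement described above amounts to a round-robin assignment of ranks to NUMA domains. The following toy function only illustrates that idea; it is not how OpenMPI implements its mapping, and the name is made up.

```python
def map_by_numa(num_ranks: int, num_domains: int) -> list:
    """Round-robin placement: rank i ends up on domain i % num_domains."""
    return [rank % num_domains for rank in range(num_ranks)]


# Four ranks on a Threadripper with two dies
print(map_by_numa(4, 2))  # [0, 1, 0, 1]
```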

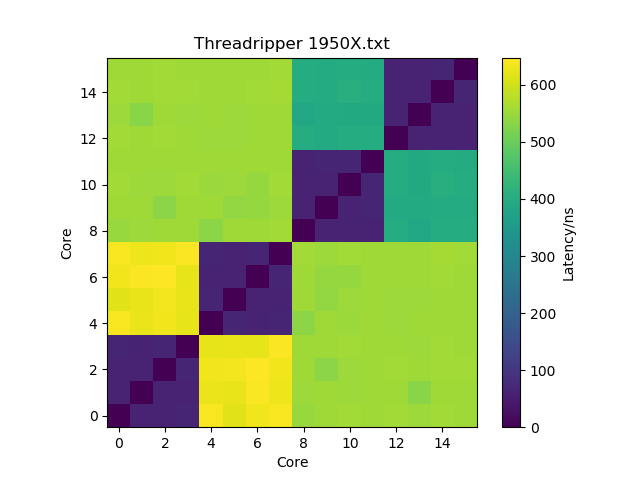

You can also use the -bind-to core and -map-by core command-line arguments to mpiexec/mpirun to achieve the same. Using l3cache instead of numa may actually get you some even better performance because latencies within a CCX are lower than between CCXes within a die, but that only gains you a few percent and requires that you use more processes and less threads, which may be suboptimal for some software. Below is an interesting figure about latency between cores, as measured with this tool (I’m not sure about the yellow squares though — I would have expected them to be more turquoise, and they don’t seem to match what I observe in actual performance).

If you are using Intel MPI (some commercial software we use does that), you only need

export I_MPI_PIN_DOMAIN=numa

to get pinning. The process creation already happens on alternating domains by default.

I like to put these variables in a file in /etc/profile.d so that they are automatically set for everyone who logs into these machines. I didn’t know this before, but both the GNU and the Intel OpenMP library will only create one thread for each core from their affinity mask, so you don’t even need to set OMP_NUM_THREADS=8 manually if you are running hybrid-parallelized codes.
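To check which affinity mask a process actually ended up with, you can ask the kernel from inside the process. A minimal sketch, assuming Linux (os.sched_getaffinity is not available on every platform). Note that it counts logical CPUs, which can be more than the number of physical cores when SMT is enabled.

```python
import os


def usable_cpus() -> int:
    """Number of logical CPUs the current process is allowed to run on."""
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))   # 0 means "this process"
    return os.cpu_count() or 1                # fallback where affinity is unavailable


print(usable_cpus())
```

Running this under mpiexec with and without the binding variables set is a quick way to confirm that the pinning is in effect.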

CPU affinity with HTCondor

Our machines run 24 hours a day, 365 days a year. When nobody is using them locally or via SSH, they run simulation jobs via the HTCondor job scheduler. Of course, we want to make good use of the resources with these jobs too, so we need CPU pinning as well. Usually, we set

NUM_SLOTS = 1
NUM_SLOTS_TYPE_1 = 1
SLOT_TYPE_1 = cpus=100%
SLOT_TYPE_1_PARTITIONABLE = true

in our Condor configuration so that people can decide themselves how many CPUs they want for their jobs. For the Threadripper, it doesn’t make sense to use more than 8 cores per job because we don’t want jobs to cross NUMA domains. This means we need two slots with 50% of the CPU cores, and we want to set SLOT<n>_CPU_AFFINITY to pin the processes to the die. I wrote a Jinja2 template that creates this Condor configuration:

{%- set nodes = salt.file.find('/sys/devices/system/node', name='node[0-9]*', type='d') -%}

NUM_SLOTS = {{ nodes | count }}

ENFORCE_CPU_AFFINITY = True

{% for node in nodes -%}

NUM_SLOTS_TYPE_{{ loop.index }} = 1

{%- set cpus = salt.file.find(node, name='cpu[0-9]*', type='d') -%}

{%- set physical_cpus = [] -%}

{% for cpu in cpus %}

{%- set cpu_id = cpu.replace(node + '/cpu', '') -%}

{%- set siblings = salt.file.read(cpu + '/topology/thread_siblings_list').strip().split(',') -%}

{% if cpu_id == siblings[0] %}

{%- do physical_cpus.append(cpu) -%}

{% endif -%}

{% endfor %}

SLOT_TYPE_{{ loop.index }} = cpus={{ physical_cpus | count }}

SLOT_TYPE_{{ loop.index }}_PARTITIONABLE = true

{%- set cpu_ids = [] -%}

{% for cpu in cpus %}

{%- do cpu_ids.append(cpu.replace(node + '/cpu', '')) -%}

{% endfor %}

SLOT{{ loop.index }}_CPU_AFFINITY = {{ cpu_ids | join(',')}}

{% endfor -%}

If you don’t have a Jinja2-based configuration manager like SaltStack, you can use the following Python script to render the template:

import os, sys

from jinja2 import Environment, FileSystemLoader

import salt

import salt.modules.file

salt.file = salt.modules.file

file_loader = FileSystemLoader(os.path.dirname(__file__))

env = Environment(loader=file_loader, extensions=['jinja2.ext.do'])

template = env.get_template(sys.argv[1])

template.globals['salt'] = salt

print(template.render())

This produces

NUM_SLOTS = 2

ENFORCE_CPU_AFFINITY = True

NUM_SLOTS_TYPE_1 = 1

SLOT_TYPE_1 = cpus=8

SLOT_TYPE_1_PARTITIONABLE = true

SLOT1_CPU_AFFINITY = 0,1,16,17,18,19,2,20,21,22,23,3,4,5,6,7

NUM_SLOTS_TYPE_2 = 1

SLOT_TYPE_2 = cpus=8

SLOT_TYPE_2_PARTITIONABLE = true

SLOT2_CPU_AFFINITY = 10,11,12,13,14,15,24,25,26,27,28,29,30,31,8,9
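If you would rather not pull in Salt and Jinja2 at all, the same logic can be sketched in plain Python. Treat this as an illustration rather than a drop-in replacement: the function names are my own, the topology is passed in as plain dictionaries so that the rendering can be tried without a NUMA machine, and unlike the template it sorts the affinity list numerically.

```python
import glob
import os
import re


def parse_cpu_list(text):
    """Expand a sysfs CPU list such as '0-3,16' into a list of ints."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def read_topology(sysfs="/sys/devices/system/node"):
    """Return one dict per NUMA node: logical CPU ID -> thread siblings."""
    node_dirs = sorted(
        glob.glob(os.path.join(sysfs, "node[0-9]*")),
        key=lambda path: int(re.search(r"(\d+)$", path).group(1)),
    )
    nodes = []
    for node_dir in node_dirs:
        siblings = {}
        for cpu_dir in glob.glob(os.path.join(node_dir, "cpu[0-9]*")):
            cpu_id = int(re.search(r"cpu(\d+)$", cpu_dir).group(1))
            path = os.path.join(cpu_dir, "topology", "thread_siblings_list")
            with open(path) as f:
                siblings[cpu_id] = parse_cpu_list(f.read())
        nodes.append(siblings)
    return nodes


def render_slots(nodes):
    """Render one partitionable Condor slot per NUMA node."""
    lines = [f"NUM_SLOTS = {len(nodes)}", "ENFORCE_CPU_AFFINITY = True"]
    for index, siblings in enumerate(nodes, start=1):
        # Count each physical core once, via its lowest-numbered sibling
        physical = [cpu for cpu, sibs in siblings.items() if cpu == min(sibs)]
        affinity = ",".join(str(cpu) for cpu in sorted(siblings))
        lines += [
            f"NUM_SLOTS_TYPE_{index} = 1",
            f"SLOT_TYPE_{index} = cpus={len(physical)}",
            f"SLOT_TYPE_{index}_PARTITIONABLE = true",
            f"SLOT{index}_CPU_AFFINITY = {affinity}",
        ]
    return "\n".join(lines)
```

On the target machine, print(render_slots(read_topology())) should produce a configuration equivalent to the one above, apart from the order of the CPU IDs.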

One additional complication is that some of our users like to set getenv = True in their Condor submit file. This means that the variables we set in the global shell profile in the previous section are inherited by the Condor job, overriding the affinity we set for the slots. So we need a job wrapper script that removes these variables if present. Put

#!/bin/bash

for var in $(/usr/bin/env | /bin/grep -E '^(OMPI_|I_MPI_)' | /usr/bin/awk -F = '{print $1}'); do

echo "Removing environment variable $var" >&2

unset "$var"

done

exec "$@"

error=$?

echo "Failed to exec($error): $@" > $_CONDOR_WRAPPER_ERROR_FILE

exit 1

into /usr/lib/condor/libexec/condor_pinning_wrapper.sh and add

USER_JOB_WRAPPER = /usr/lib/condor/libexec/condor_pinning_wrapper.sh

to your Condor configuration.
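The filtering step of the wrapper is easy to get subtly wrong, so here is the same idea as a small Python sketch that can be tried on a plain dictionary first. The names PREFIXES, strip_mpi_variables and main are my own. Unlike the shell script, this version does not write to $_CONDOR_WRAPPER_ERROR_FILE when the exec fails.

```python
import os
import sys

# Prefixes of the Open MPI and Intel MPI variables set in the shell profile
PREFIXES = ("OMPI_", "I_MPI_")


def strip_mpi_variables(env):
    """Return a copy of env without variables that start with an MPI prefix."""
    return {key: value for key, value in env.items()
            if not key.startswith(PREFIXES)}


def main(argv):
    """Run the job given in argv[1:] with the cleaned environment."""
    clean = strip_mpi_variables(os.environ)
    # Replace this process with the job, like `exec "$@"` in the shell script
    os.execvpe(argv[1], argv[1:], clean)


if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv)
```

Variables like OMP_NUM_THREADS or PATH pass through untouched, since only the two MPI prefixes are matched.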

Update 2019-01: Updated the Jinja template to work correctly on non-16-core Threadrippers. These have one (12-core, 24-core) or two (8-core) cores disabled on each CCX, which results in non-consecutive core IDs.

Filtering outgoing traffic with VirtualBox’s NAT interface

Hypervisors like VMware Workstation, VMware Fusion or VirtualBox usually offer three kinds of network interfaces: bridged (to a network on the host), NAT (sharing an IP address with the host via network address translation) and host-only (a connection exclusively between host and guest).

VMware, at least on Linux, realizes NAT entirely in the kernel, using standard IP forwarding and setting up a DHCP server on the host that gives addresses to the guest. VirtualBox, on the other hand, handles NAT entirely in user-space, meaning all packets entering and leaving the VM really seem to be going to and from a process named VBoxHeadless or similar.

If you want to limit what kinds of connections a guest system can make, VMware lets you do that quite easily by adding rules to the FORWARD chain:

# -I inserts at the top of the chain, so the rule added last is evaluated first

sudo iptables -I FORWARD -i vmnet8 -j REJECT

sudo iptables -I FORWARD -i vmnet8 -j ACCEPT -d github.com

Unfortunately, things are a lot more difficult with VirtualBox. Since there is no dedicated interface, you need to filter based on process. iptables can’t do that directly, but you can use cgroups to add the necessary marks to the packets:

sudo mkdir /sys/fs/cgroup/net_cls/virtualbox

echo 86 | sudo tee /sys/fs/cgroup/net_cls/virtualbox/net_cls.classid

sudo iptables -N VIRTUALBOX

sudo iptables -I OUTPUT -j VIRTUALBOX -m cgroup --cgroup 86

# -A appends, so ACCEPT is evaluated before the catch-all REJECT

sudo iptables -A VIRTUALBOX -j ACCEPT -d github.com

sudo iptables -A VIRTUALBOX -j REJECT

Of course, you now need to create the VirtualBox process inside that cgroup. One way is

sudo apt-get install cgroup-tools

sudo cgexec -g net_cls:virtualbox vboxmanage startvm WindowsXP --type=headless

or you can use a wrapper script instead of vboxmanage:

#!/bin/sh -e

CGROUP_NAME=virtualbox

CGROUP_ID=86

if [ ! -d /sys/fs/cgroup/net_cls/$CGROUP_NAME/ ]; then

    mkdir /sys/fs/cgroup/net_cls/$CGROUP_NAME

    echo $CGROUP_ID > /sys/fs/cgroup/net_cls/$CGROUP_NAME/net_cls.classid

fi

/bin/echo $$ > /sys/fs/cgroup/net_cls/$CGROUP_NAME/tasks

exec /usr/bin/vboxmanage "$@"
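For completeness, the cgroup part of the wrapper can also be written in Python. This is a rough sketch that assumes the cgroup v1 net_cls hierarchy used above. The function name join_net_cls_cgroup and its root parameter are my own; the latter only exists so that the logic can be tried against a scratch directory without root privileges.

```python
import os


def join_net_cls_cgroup(name, classid, pid=None, root="/sys/fs/cgroup/net_cls"):
    """Create the net_cls cgroup if needed and move a process into it."""
    path = os.path.join(root, name)
    if not os.path.isdir(path):
        os.mkdir(path)
        # Packets from processes in this cgroup get tagged with the class ID
        with open(os.path.join(path, "net_cls.classid"), "w") as f:
            f.write(f"{classid}\n")
    # Writing a PID to "tasks" moves that process into the cgroup
    with open(os.path.join(path, "tasks"), "w") as f:
        f.write(f"{os.getpid() if pid is None else pid}\n")
    return path
```

A real wrapper would call join_net_cls_cgroup("virtualbox", 86) as root and then replace itself with vboxmanage via os.execv, so that the VM process inherits the cgroup.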

Using a BIND DNS server in an Active Directory Environment

Years ago, I posted a script that allowed ISC DHCPd to update a Microsoft DNS server with dynamic records for DHCP clients. I haven’t used that method in a long time, and there is a much simpler one: use ISC DHCPd together with the BIND DNS server like everybody else does, and only delegate the _msdcs and _sites zones from the BIND server to the Microsoft DNS servers:

_msdcs.example.com. 86400 IN NS dc01.example.com.

_msdcs.example.com. 86400 IN NS dc02.example.com.

_sites.example.com. 86400 IN NS dc01.example.com.

_sites.example.com. 86400 IN NS dc02.example.com.

Then on all your machines, use the BIND server as DNS server (typically set via DHCP option 6; option 23 is the DHCPv6 equivalent). For Windows Domain matters, only records below _msdcs and _sites are ever looked up.

I initially believed you could even point your domain controllers to the BIND DNS server, on the assumption that they would follow the NS record and update their own records on the Microsoft DNS server. As it turns out, domain controllers use the RFC 2136 DNS UPDATE method to register their own records, so you’ll see error messages in your logs if you point them to the BIND DNS server (on a Microsoft DC, these refer to NETLOGON and dynamic DNS registrations, while on a Samba DC they are about samba_dnsupdate). If you are running Samba 4.5 or higher, you should ensure that samba_dnsupdate is called with the --use-samba-tool flag, which can probably be done by setting the option below in your /etc/samba/smb.conf. If you are running an older Samba version or any Windows Server version, you need to resort to using your domain controllers’ IP addresses as DNS servers on all domain controllers (Samba: put them into /etc/resolv.conf, Windows: set them in the network interface properties).

dns update command = /usr/sbin/samba_dnsupdate --use-samba-tool

For compatibility with Unix clients (including Mac OS X), you’ll want to add a couple of CNAME records for the SRV records:

_ldap._tcp.example.com. 86400 IN CNAME _ldap._tcp.dc._msdcs.example.com.

_gc._tcp.example.com. 86400 IN CNAME _ldap._tcp.gc._msdcs.example.com.

_kerberos._tcp.example.com. 86400 IN CNAME _kerberos._tcp.dc._msdcs.example.com.

_kerberos._udp.example.com. 86400 IN CNAME _kerberos._tcp.dc._msdcs.example.com.

_kpasswd._tcp.example.com. 3600 IN SRV 0 100 464 dc01.example.com.

_kpasswd._tcp.example.com. 3600 IN SRV 0 100 464 dc02.example.com.

_kpasswd._udp.example.com. 3600 IN SRV 0 100 464 dc01.example.com.

_kpasswd._udp.example.com. 3600 IN SRV 0 100 464 dc02.example.com.

The _kpasswd records unfortunately can’t be CNAMEs because they don’t exist in the _msdcs branch, so you manually need to keep them up-to-date when you add and remove domain controllers.
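Since these records have to be maintained by hand, it can help to generate them from a list of domain controllers. Here is a minimal sketch; the function name kpasswd_records is my own, and the priority, weight, port and TTL are simply the values used above. It writes every name with a trailing dot so that BIND does not append the zone origin a second time.

```python
def kpasswd_records(domain, controllers, ttl=3600, port=464):
    """Return zone-file lines with _kpasswd SRV records for every DC."""
    lines = []
    for proto in ("tcp", "udp"):
        for dc in controllers:
            # Trailing dots make both the owner name and the target absolute
            lines.append(
                f"_kpasswd._{proto}.{domain}. {ttl} IN SRV "
                f"0 100 {port} {dc}.{domain}."
            )
    return lines


if __name__ == "__main__":
    print("\n".join(kpasswd_records("example.com", ["dc01", "dc02"])))
```

Paste the output into the zone file and bump the serial whenever a domain controller is added or removed.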

Boot a Windows install disc from the network using iPXE and wimboot

A while ago, I showed how you can use a Linux PXE server along with a tool called Serva to PXE boot a Windows installer DVD. By now, there is a much nicer solution available that doesn’t require any Windows tools: iPXE with wimboot. So go ahead and replace your PXELinux setup with iPXE first. Then copy the contents of a Windows installer DVD to your TFTP server and make sure that the folder is also shared read-only via SMB. Now copy the wimboot binary to your TFTP server and add something like the following to your iPXE config file:

set serverip 192.168.200.29

set tftpboot tftp://${serverip}/

set tftpbootpath /mnt/Daten/tftpboot

:menu

menu iPXE boot menu

item --key w win10de Windows 10 16.07 x64 German

choose os

goto ${os}

:win10de

echo Booting Windows Installer...

set root-path ${tftpboot}/ipxe

kernel ${root-path}/wimboot gui

set root-path ${tftpboot}/Win10_1607_German_x64

initrd ${root-path}/boot/bcd BCD

initrd ${root-path}/boot/boot.sdi boot.sdi

initrd ${root-path}/sources/boot.wim boot.wim

initrd ${root-path}/boot/fonts/segmono_boot.ttf segmono_boot.ttf

initrd ${root-path}/boot/fonts/segoe_slboot.ttf segoe_slboot.ttf

initrd ${root-path}/boot/fonts/segoen_slboot.ttf segoen_slboot.ttf

initrd ${root-path}/boot/fonts/wgl4_boot.ttf wgl4_boot.ttf

boot || goto failed

That’s it. When you boot this boot menu entry, you’ll be presented with the Windows installer, but if you click through it, it will at some point ask you for a driver because it can’t find its installer packages. So before you click through, hit Shift-F10 and execute the following commands to set up the network, mount the SMB share and re-execute the installer:

wpeinit

net use s: \\192.168.200.29\tftpboot\Win10_1607_German_x64 bar /user:foo

s:\sources\setup.exe

If your SMB server is running Samba, the user you specify (foo) must not exist on the server, which forces Samba to fall back to anonymous authentication. With a Windows server, things might be different.

That should give you a working installer that will get your Windows running within a few minutes because Gigabit Ethernet has a much higher bandwidth than a spinning DVD or a cheap USB flash drive.

Serva did have one advantage, however: when you set it up, you could inject network drivers into the boot image. With Windows 10, luckily, that has become a non-issue: Microsoft releases a new installer ISO for it about once a year, which you can directly download and which should contain all drivers for the latest hardware available when it was released.

Wiring Fibre Channel for Arbitrated Loop

We have a small Fibre Channel SAN with three servers, a switch and a dual-controller RAID enclosure. With only a single switch, we obviously couldn’t connect all servers redundantly to the RAID system. That meant, for example, that firmware updates to it could only be applied after shutting down the servers. Buying a second switch was hard to justify for this simple setup, so we decided to hook the switch up to the first RAID controller and wire a loop off the second RAID controller. Each server would have one port connected to the switch and another one to the loop.

Back in the old days, Fibre Channel hubs existed for exactly this purpose, but nowadays you simply can’t get them anymore. However, in a redundant setup, you don’t need a hub: you can simply run the cables in a daisy-chain fashion. The only downside of not having a hub, the loop going down if one server goes down, is irrelevant here because you have a second path via the switch. For technical details on the loop topology, you can have a look at documentation available from EMC.

To wire your servers and RAID controllers in a fashion resembling a hub (only without the automatic bypass if a server goes down), you need simplex patch cables. These consist of a single fibre (instead of two, like you are used to) and have a connector that looks like half of a regular LC connector. You can get them in the same qualities as regular (duplex) patch cables and in single- and multi-mode as needed. They look like this:

These cables are somewhat exotic, so your usual cable dealer might not have them, but they exist and are available from specialized fibre cable dealers. As you are wiring in a daisy-chain fashion, you need one simplex cable per node you want to connect.

Once you have the necessary cables, you wire everything by connecting a cable from the output side of the FC port on the first device and connecting it to the input side of the FC port on the second device. The second device’s output side connects to the third device’s input side and so on, until you have made a full loop by connecting the last device’s output side to the first device’s input side, like this:

How can you tell the output side from the input side? Some transceivers have little arrows or have labels “TX” (output) and “RX” (input). If yours don’t, you can recognize the output side by the red laser light coming from it. To protect your eyes, never look directly into the laser light. Never connect two output sides together, otherwise you may damage the laser diodes in both of them. Therefore, be careful and always double-check.
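To make the wiring rule concrete, here is a tiny sketch that lists the cables for a given set of devices. The function name loop_cables and the device names are made up for illustration. Each pair means "output (TX) of the first device goes to input (RX) of the second".

```python
def loop_cables(devices):
    """Return (tx_device, rx_device) pairs that form a closed loop."""
    if len(devices) < 2:
        raise ValueError("a loop needs at least two devices")
    # Device i transmits to device i+1; the last one closes the loop
    return [
        (devices[i], devices[(i + 1) % len(devices)])
        for i in range(len(devices))
    ]


if __name__ == "__main__":
    for tx, rx in loop_cables(["raid-b", "server1", "server2", "server3"]):
        print(f"{tx} TX -> {rx} RX")
```

The number of pairs equals the number of devices, which matches the one-simplex-cable-per-node rule.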

That’s it, you’re done. All servers on the loop should immediately see the LUNs exported to them by the RAID controller.

In my setup, however, further troubleshooting was required. The loop simply did not come up. As it turns out, someone had set the ports on one FC HBA to point-to-point-only and 2 Gbit/s mode. After switching both these settings to their default automatic mode, the loop came up and data started flowing. My HBAs are QLogic QLE2462 (4 Gbit/s generation) and QLE2562 (8 Gbit/s generation), and they automatically negotiated the fastest common speed they could handle, which is 4 Gbit/s. Configuring these parameters on the HBA usually requires hitting a key at the HBA’s pre-boot screen to enter the configuration menu and doing it there, or using vendor-specific software. I didn’t have access to the pre-boot configuration menu and was running VMware ESXi 6.0 on the servers. Luckily, the QConvergeConsole for my QLogic adapters is also available for VMware. Unfortunately, it is not as easy to install as on Windows or Linux. You first install a CIM provider via the command line on the ESXi host, reboot the host and then install a plugin into VMware vCenter server.